Learn Pandas Part 2 - Reading CSV Files

In this post we learn the basics of reading CSV files using pandas

Overview

Pandas is a container for rectangular data but it is astonishingly flexible in what you can put inside.

You can put images inside a dataframe - if you conceive an image being a rectangular block of numbers. You can read a word document into a pandas dataframe - with each paragraph occupying a cell. Even HTML can be read into a dataframe - or at least HTML tables - which are extremely common. And with this flexibility in what a dataframe can contain also comes severel ways to get data inside.

Naturally we start with import pandas as pd

import pandas as pd

[a for a in dir(pd) if a.startswith('read')]

Reading CSVs

99% of the time I use pd.read_csv since it 99% of the time you are doing a data science project the data is in a comma separated value file. I say 99%, mainly to make a point, but this holds for projects that are shared via the web. Inside companies, CSVs are still popular, but you are also more likely to find that data is in databases or json files or excel sheets. Nevertheless, CSVs are very commonly used, and so pd.read_csv is your go to means of reading data.

pd.read_csv is a pretty versatile function, and that versatility comes from being based on an even more versatile function pd.read_table. Mostly, you can use either pd.read_csv or pd.read_table interchangeably, except that pd.read_csv is more focused on CSVs.

Reading a CSV File

Firstly, d.read_csv works identically on a local file as it does with a url. To illustrate, we found a CSV file on Data.gov about runways in Notrh Dakota, and we can load it directly with the url, or with the local file after downloading. Therefore - these are file paths are identical.

runways_in_north_dakota_url = 'https://gishubdata.nd.gov/sites/default/files/NDHUB.AirportRunways.csv'

runways_in_north_dakota_file = 'data/pandas/NDHUB.AirportRunways.csv'

Now we can read the data into a dataframe and then display it

nd_runways = pd.read_csv(runways_in_north_dakota_url)

nd_runways

So now you can see the value and the power of using pandas for reading csvs - with just a line of code. And since there is a a lot of valuable data available in CSVs, knowing how to read this into pandas for processing is a valuable skill indeed.

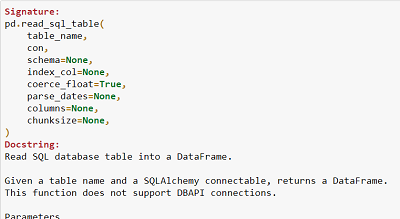

CSV data can get pretty varied you will need to know how to use some of the parameters of read_csv depending on the data you are reading. As noted before, you can use ? at the end of a function in a Jupyter notebook to display documentation. Here we do something slightly different - we want to load that documentation into a pandas DataFrame and display it. We will use pd.read_csv.__doc__ to get the documentation.

Tip: You can use __doc__ to access the documentation for a python function or object

Pandas Read CSV Documentation

The function documentation isn't exactly rectangular data - but a data scientist takes data from one shape to another. The code gets the docstring, does some stripping and splitting until we have a below does this.

Note: this code won't work for all docstring since the format varies but it works for this function

read_csv_parameters = pd.DataFrame( # Create a Dataframe

[line.split(':') for line # For every line split each by colon

in pd.read_csv.__doc__.split('\n') # Get the docstring and split into lines

if ' :' in line # Keep if " :" is in the line

and not line.startswith(' ') # And there is no leading spaces

and ',' in line], # And there is a comma in the line

columns=['parameter','description'] # Set the column names

).applymap(lambda x: x.strip()) # Strip all spaces from the dataframe

Concepts covered in the code above

- list comprehensions

- filters in list comprehensions

- Splitting strings

- Creating a dataframe from a list of lists

- Using

applymapto strip spaces from all cells of a dataframe

So now we have the dataframe, the code below makes it look a little nicer by using the dataframe styler. I use it to highlight the parameters that I use, depending on the need.

Tip: learn how to use dataframe styling .. you'll probably get paid more :-)

params_to_highlight = ['filepath_or_buffer','delimiter', 'usecols','dtype',

'sep', 'nrows','skiprows', 'low_memory', 'names']

def highight_parameter(param):

is_key = param.isin(params_to_highlight)

return ['background-color: #a7b9f1; font-weight:bold' if v else '' for v in is_key]

read_csv_parameters.style.hide_index().apply(highight_parameter, axis=1)

Common Parameters

| parameter | description |

|---|---|

| filepath_or_buffer | The name or url of the file. You always need this |

| sep | What separates the cells, if not "," |

| delimiter | What separates the cells, if not ",". Alias for sep |

| names | The column names to use for all the columns in the CSV |

| usecols | Which of the columns to use |

| dtype | Use a dict to pass in the datatypes for each column. Pretty useful |

| skiprows | How many rows to skip at the start of the file |

| nrows | How many rows to use in total |

| low_memory | If the file is large, an you have lots of memory, use low_memory=False |

Conclusion

This was a brief walk through pd.read_csv. which we saw is pretty useful for reading data into pandas from CSVs. We looked at how to get public data to practice reading CSVs and also how to use teh documentation to learn more.

This post is part of a series. You might also be interested in